Why ChatGPT Won’t Become Your Doctor

And actual ways we can use language models in medicine

I saw a headline the other day that said that ChatGPT passed the Step 1 exam, an exam every medical student is required to take after the first two years of medical school before starting clinical rotations. It’s the ultimate exam, combining everything you’ve learned from biochemistry to neuroanatomy to pathology. And as many studies have shown, it’s a terrible indicator of whether someone will actually be a good doctor.

Regardless of that, it was a very catchy headline that of course led to the question: if ChatGPT can pass the Step 1 exam, an exam that requires future doctors to memorize and understand all of the building blocks of medicine, then do we need doctors at all?

A great question - let’s dive in.

What is ChatGPT actually doing?

Before we get into the philosophical question of whether an AI could be your doctor, let’s take a minute to understand (in the most basic of ways) how ChatGPT works. Under the hood, ChatGPT uses a language model from OpenAI’s GPT-3 family, specifically GPT-3.5 at launch (the marketing department has some work to do on naming things, I know). This model was trained on a large part of the internet, and essentially what it does is take the input you provide and predict what should come next based on what it has seen in the training data.

In simpler terms, the model looks at your text input and predicts the most likely next word or words that should come after it based on what it has seen. You can tweak things to make the outputs slightly variable so you don’t get the same result every time, but essentially it’s just completing the sentence for you.
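To make the "predict the next word" idea concrete, here is a toy sketch. It is not how GPT models are built (they use neural networks over tokens, not word counts); it only counts which word follows which in a tiny made-up corpus and then completes a prompt. The corpus, the function names, and the `temperature` knob as implemented here are all illustrative assumptions.

```python
import random
from collections import Counter, defaultdict

# Tiny made-up corpus; a real model is trained on vastly more text.
CORPUS = (
    "chest pain can be caused by a heart attack . "
    "chest pain can be caused by acid reflux . "
    "chest pain can be caused by a pulled muscle . "
    "chest pain can be caused by a heart attack ."
).split()


def build_bigram_counts(words):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word(counts, word, temperature=0.0, rng=random):
    """Pick a next word. Temperature 0 always takes the most frequent one."""
    options = counts[word]
    if not options:
        return None  # the toy model has never seen this word
    if temperature == 0:
        return options.most_common(1)[0][0]
    # Higher temperature flattens the weights, so rarer words get picked more often.
    candidates = list(options)
    weights = [options[w] ** (1.0 / temperature) for w in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


def complete(counts, prompt, max_words=10, temperature=0.0, rng=random):
    """Extend the prompt one word at a time until a period or the word limit."""
    words = prompt.split()
    for _ in range(max_words):
        following = next_word(counts, words[-1], temperature, rng)
        if following is None or following == ".":
            break
        words.append(following)
    return " ".join(words)


COUNTS = build_bigram_counts(CORPUS)
print(complete(COUNTS, "chest pain"))
```

With the temperature at 0 the sketch always produces the same completion; raising it lets less frequent continuations such as "acid reflux" show up some of the time, which is the variability described above.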

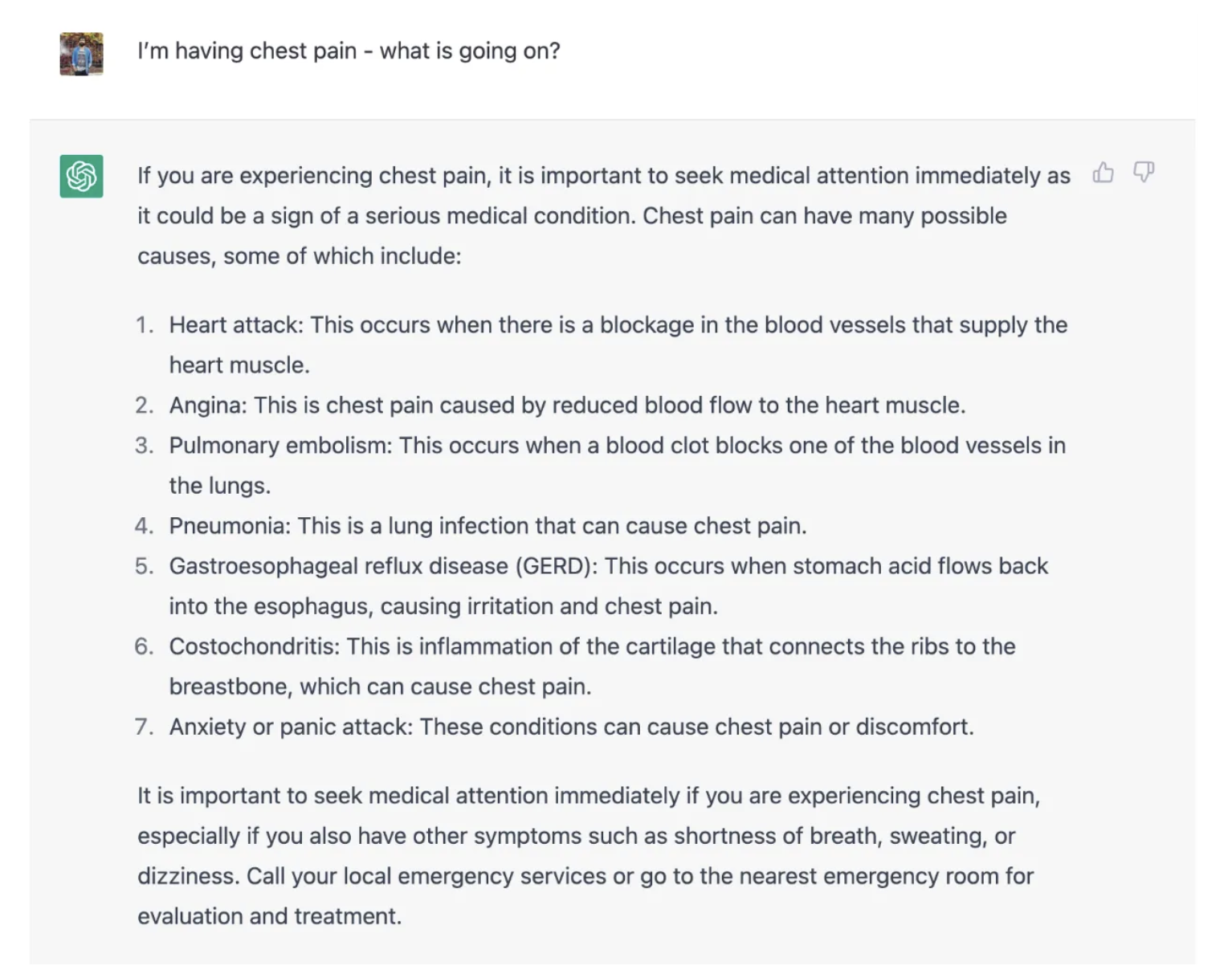

This is incredibly important to understand, especially when considering whether the AI can diagnose illness. When you give it a list of symptoms and ask what something could be, it’s not reasoning through what the cause might be. Instead, it’s drawing on statistical patterns learned from its training data and asking which word or words are most likely to come next. This ultimately forms a sentence in response to your question of “I’m having chest pain - what is going on?”

This sounds like it’s thinking and coming up with a list but it’s really just predicting the next word!

Though it may look like ChatGPT just came up with a list of 7 things that are likely to cause chest pain, it has no idea what this list is. It is just stringing together words that should come one after another based on the probabilities it has seen in the training data. This is why there have been reports of it messing up simple arithmetic and making up research studies that don’t exist. It’s not thinking, it’s adding words to previous words based on statistics.

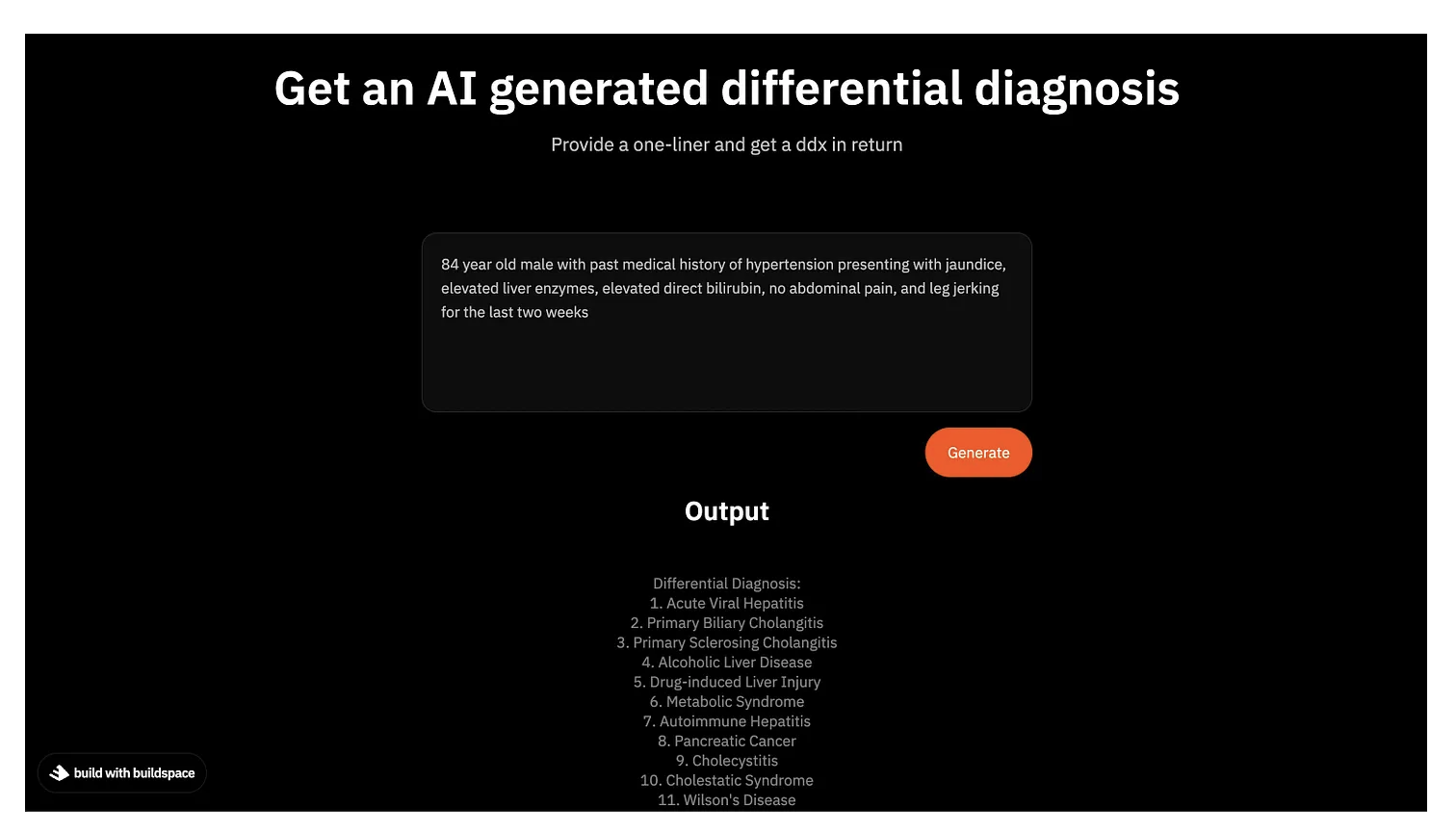

I actually built a fun tool while on my night medicine rotation when I had some downtime (LOL) that could take in a one-liner of a patient and output a differential diagnosis of what might be going on. This was a fun exercise to do before I went to go see a new patient in the ED just to see how the AI would compare to what we were thinking.

Give it a one-liner and get a differential diagnosis using the OpenAI API
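The tool itself isn't shown here, so what follows is only a guess at the general shape of something like it, not the actual implementation. The prompt wording, the function names, and the `complete` callable are all hypothetical; `complete` stands in for whatever function sends a prompt to a language model and returns its text, and a canned fake is used so the sketch runs without an API key.

```python
import re
from typing import Callable


def build_differential_prompt(one_liner: str, max_items: int = 5) -> str:
    """Wrap a patient one-liner in instructions for the model (hypothetical wording)."""
    return (
        "You are assisting a physician. Given the patient one-liner below, "
        f"list up to {max_items} differential diagnoses, most likely first, "
        "one per line.\n\n"
        f"One-liner: {one_liner.strip()}\n"
        "Differential:\n"
    )


def parse_differential(completion: str) -> list[str]:
    """Split the model's text into items, dropping bullets and numbering."""
    items = []
    for line in completion.splitlines():
        cleaned = re.sub(r"^\s*(?:[-*]|\d+[.)])\s*", "", line).strip()
        if cleaned:
            items.append(cleaned)
    return items


def differential(one_liner: str, complete: Callable[[str], str], max_items: int = 5) -> list[str]:
    """Build the prompt, call the model, and return at most max_items diagnoses."""
    prompt = build_differential_prompt(one_liner, max_items)
    return parse_differential(complete(prompt))[:max_items]


def fake_complete(prompt: str) -> str:
    """Stand-in for a real model call; returns canned text for demonstration."""
    return "1. Acute coronary syndrome\n2. Pulmonary embolism\n- GERD\n"


print(differential("65M with HTN presenting with chest pain", fake_complete))
```

Swapping `fake_complete` for a function that calls a real model is the only change needed to try it against live output, and the output still needs a clinician's judgment on top.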

It’s a great demo - but that’s all it really is. And the reason is simple: medical diagnosis isn’t that difficult. We see it all the time in shows like House MD where there is a big medical mystery and they need a genius doctor to put together what is going on. But in the real world, when someone comes in with chest pain, abdominal pain, altered mental status, or a funky heart rhythm, we can pretty quickly figure out what is going on. Sure, sometimes there are things that don’t fully make sense, or the data that we have from imaging, physical exam, and labs don’t add up and we have to do a little more thinking. But these are what we call “zebras” in medicine, and the biggest difference between real medicine and TV medicine (and medical school exams for that matter) is that when you see a patient and their symptoms, you should think about horses, not zebras.

How can we use ChatGPT or language models in medicine?

Though ChatGPT isn’t going to be the next Dr. House (and we don’t really need it to be), there are still some areas where language models like this can be useful for improving the efficiency of the care that we currently deliver.

Text Generation

One of the most annoying parts about medicine is all the paperwork. There are so many times when I’m in the clinic and have to write a letter for authorization of a service or to a DME company because a patient needs a new walker or blood pressure machine. We have templates for this already in our EMR, but there is still the manual process of filling in these templates for a given patient based on their specific condition. Many times, I’m doing this for patients of other doctors that I’m covering so I may not even know the full history of the patient. This requires me to dig through their chart for their medical history, medications, and why they need a specific service or piece of equipment. This probably isn’t the best use of a clinician’s time.

Having a ChatGPT-like tool to help with this could be a huge lift for physicians and also the back office in general. The AI could take in the data from a patient’s chart and completely fill out a template appropriately so that a human would just have to review it before sending. Doximity is already testing this out with their latest DocsGPT product and though it is still early and would be better integrated into the actual EMR, it’s a good proof of concept.
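Here is a minimal sketch of the "fill the template, then have a human review it" step, assuming the chart data has already been pulled into a dictionary (in practice, that extraction is the part a language model would help with). The letter wording, the field names, and `draft_letter` are made up for illustration and are not taken from any EMR or from DocsGPT.

```python
from string import Template

# Hypothetical letter template for a durable medical equipment (DME) request.
DME_LETTER = Template(
    "To whom it may concern,\n"
    "$patient_name (DOB $dob) requires a $equipment due to $diagnosis.\n"
    "Sincerely, $clinician"
)

REQUIRED_FIELDS = ("patient_name", "dob", "equipment", "diagnosis", "clinician")


def draft_letter(chart: dict) -> tuple[str, list[str]]:
    """Fill the template from chart data and list fields a human still must supply."""
    missing = [field for field in REQUIRED_FIELDS if not chart.get(field)]
    present = {key: value for key, value in chart.items() if value}
    # safe_substitute leaves "$field" in place for anything missing,
    # so gaps stay visible in the draft instead of being silently dropped.
    draft = DME_LETTER.safe_substitute(present)
    return draft, missing


chart = {
    "patient_name": "Jane Doe",
    "dob": "1950-01-01",
    "equipment": "walker",
    "diagnosis": "gait instability",
}
draft, missing = draft_letter(chart)
print(draft)
print("Needs review:", missing)
```

Returning the list of missing fields alongside the draft keeps the human reviewer in the loop: nothing gets sent until every placeholder has been filled and checked.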

Chart Review

This is by far the most interesting use case in my opinion. One thing that actually is really hard to do in medicine is get a sense of a patient’s complete history in a short amount of time. When someone comes into the hospital, you want to know their medical history, what medications they take, and what got them to you in the first place. This process typically involves a lot of “chart review” which is just a nice way to say that you go through old notes that other providers have written to try and figure out what is going on. Sometimes this is easy because the case is straightforward, but many times I see patients who have multiple hospitalizations and ER visits and are followed by lots of specialists outpatient. This means opening up the EMR to hundreds of notes and trying to figure out the relevant ones (God forbid I have to use Epic search to find a specific piece of information).

Language models could be super helpful here. It would be great if a model could be trained on a patient’s chart and I could essentially ask the AI questions like “What medications has this patient been on for hypertension, and over what time course?” or “What was the reason this patient got a pacemaker 5 years ago, and what led them to that?”

The big thing to note here is that I’m not asking the AI to come up with new information based on the inputs I’m giving it (which is what asking an AI to diagnose symptoms is) but rather I’m having it synthesize and summarize a large amount of unstructured data (medical notes) to get me to a conclusion faster than I can go through the unstructured data myself.
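One way this could work without training a model on each chart is retrieval: pick the notes most relevant to the question and hand only those to the model along with the question. That is a swapped-in technique, not something described above, and the sketch below uses crude keyword overlap as a stand-in for a real search method. The `Note` structure, the stopword list, and the prompt wording are all assumptions.

```python
import re
from dataclasses import dataclass


@dataclass
class Note:
    date: str    # ISO date, e.g. "2018-03-02"
    author: str  # service or clinician that wrote the note
    text: str


# Small illustrative stopword list so common words don't count as matches.
STOPWORDS = {
    "a", "an", "and", "been", "did", "for", "get", "got", "has", "of", "on",
    "over", "patient", "that", "the", "them", "this", "to", "was", "what", "why",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its distinct non-stopword tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def top_notes(question: str, notes: list[Note], k: int = 3) -> list[Note]:
    """Return up to k notes sharing the most keywords with the question."""
    query = tokenize(question)
    scored = [(len(query & tokenize(note.text)), note) for note in notes]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: (-pair[0], pair[1].date))
    return [note for _, note in relevant[:k]]


def build_chart_prompt(question: str, notes: list[Note], k: int = 3) -> str:
    """Assemble a prompt that asks the model to answer only from the selected notes."""
    excerpts = "\n".join(
        f"[{note.date}, {note.author}] {note.text}" for note in top_notes(question, notes, k)
    )
    return (
        "Answer using only the notes below. If they do not contain the answer, say so.\n\n"
        f"{excerpts}\n\nQuestion: {question}\nAnswer:"
    )


NOTES = [
    Note("2018-03-02", "Cardiology", "Pacemaker placed for complete heart block after syncope."),
    Note("2020-06-11", "Primary care", "Hypertension: started lisinopril 10 mg daily."),
    Note("2021-01-20", "Podiatry", "Routine diabetic foot exam, no ulcers."),
]
print(build_chart_prompt("Why did this patient get a pacemaker?", NOTES))
```

Telling the model to answer only from the supplied notes fits the point above: the goal is to summarize what is already in the chart, not to have the model generate new information.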

Final Thoughts

As a tool, ChatGPT is fun to play with and even useful in a number of domains, but it’s still in its infancy. It’s important to remember, however, that the major use case of language models isn’t going to be replacement but rather augmentation of the work done by providers in healthcare. It can make things faster and easier by being trained on vast amounts of unstructured data, but the notion that it could replace your doctor is a silly one. Not because doctors are almighty intellectual beings (though if you’ve ever talked to a cardiologist, they certainly think they are) but because diagnosis is definitely not the hardest part of medicine.

We’re going to see a lot more use cases for language models in areas of healthcare like back office work, billing, and document generation, and maybe we’ll even see adoption by EHRs to help providers better engage with the large amount of unstructured data that has to be parsed through when taking care of a patient. Of course, there are lots of financial implications for this and the cost of running these models is no joke, especially for an industry that is known to not always spend money efficiently (we’ll save that topic for another day). For now, I wouldn’t jump to the conclusion that just because ChatGPT can pass the Step 1 exam it’s ready to become your doctor.